GitHub Repository

We publish selected software packages via GitHub. You can find our most recent developments at https://github.com/DFKI-Interactive-Machine-Learning/

Multimodal Multisensor Activity Annotation and Recording Tool

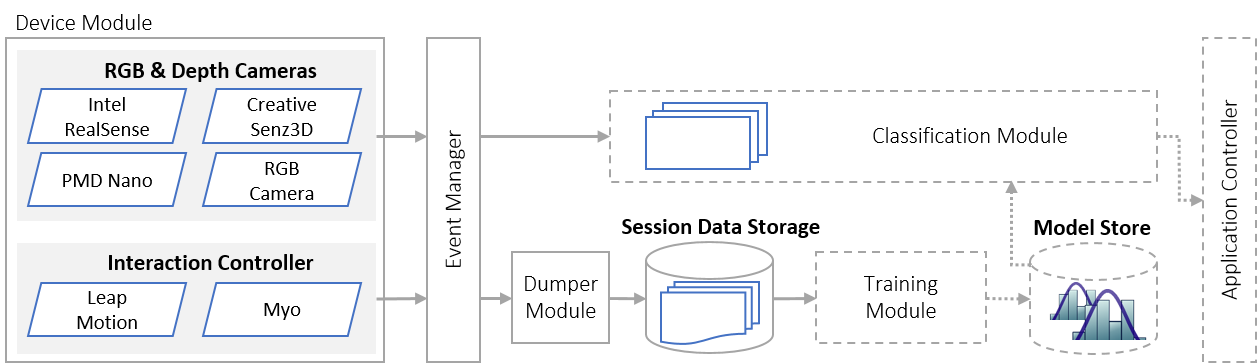

Wearable and ubiquitous sensor systems provide physiological data that can be embedded into intelligent user interfaces. Incorporating such data should benefit both humans and computers and improve the interaction experience. We provide two tools under an open source license: one for recording and one for annotating multimodal data from multiple sensors.

Recording | MMART

The Multimodal Multisensor Activity Recording Tool (MMART) supports synchronous recording from common 2D/3D cameras and interaction devices (Myo, Leap Motion). You can find the source code and further instructions on GitHub.
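Synchronous recording matters because devices such as cameras and hand trackers sample at different rates, so their samples must later be related on a common timeline. The sketch below shows one common approach: it matches each sample of a reference stream to the nearest-in-time sample of another stream. It assumes every stream is a list of `(timestamp_seconds, value)` tuples sorted by time; this representation and the function name `align_nearest` are hypothetical and do not describe MMART's actual data format.

```python
from bisect import bisect_left
from typing import Any, List, Tuple

# Hypothetical stream representation: (timestamp in seconds, sample value),
# sorted by timestamp. This is NOT MMART's actual file format.
Sample = Tuple[float, Any]


def align_nearest(reference: List[Sample], other: List[Sample]) -> List[Tuple[float, Any, Any]]:
    """For each reference sample, pick the sample of `other` closest in time."""
    if not other:
        raise ValueError("other stream is empty")
    times = [t for t, _ in other]
    aligned = []
    for t, ref_value in reference:
        i = bisect_left(times, t)
        # The nearest sample is one of the neighbours around the insertion point.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(other)]
        best = min(candidates, key=lambda j: abs(times[j] - t))
        aligned.append((t, ref_value, other[best][1]))
    return aligned


if __name__ == "__main__":
    camera = [(0.000, "frame0"), (0.033, "frame1"), (0.066, "frame2")]  # ~30 Hz
    hand = [(0.01 * k, f"h{k}") for k in range(8)]  # 100 Hz
    for row in align_nearest(camera, hand):
        print(row)
```

Using binary search keeps each lookup logarithmic in the length of the faster stream, which matters for long high-rate recordings; a real recorder may instead rely on hardware or shared-clock timestamps.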

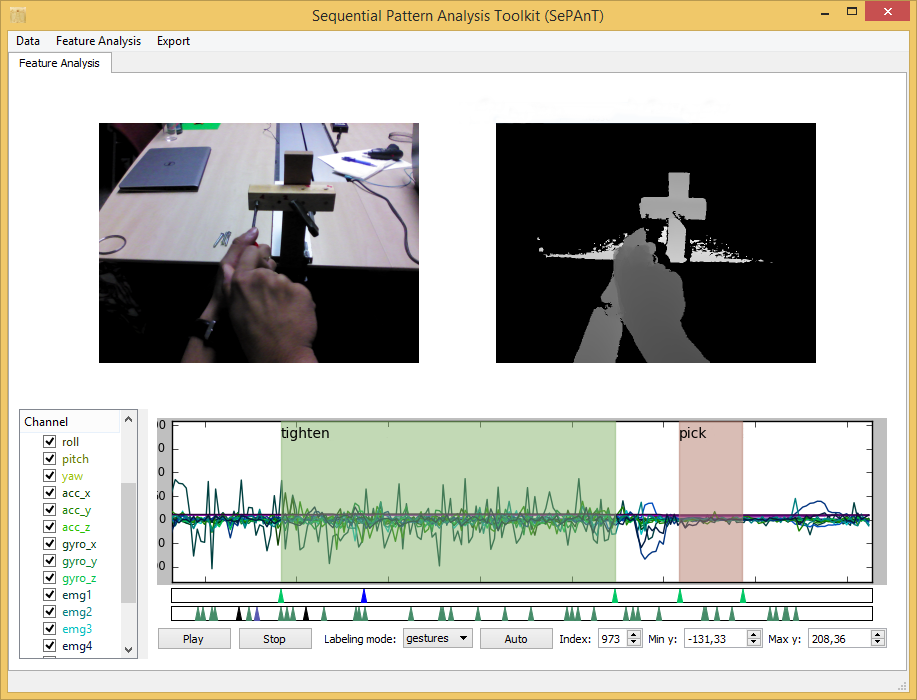

Annotating | MMAAT

The data format is shared with our Multimodal Multisensor Activity Annotation Tool (MMAAT), which allows for intuitive labeling of the data and provides multiple viewports for visualizing it. The resulting labeled data can be exported for supervised machine learning. You can find the source code and further instructions on GitHub.
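Exporting annotations for supervised learning typically means turning labeled time intervals into one label per recorded sample. The following is a minimal sketch under stated assumptions: annotations are `(start, end, label)` tuples in seconds covering half-open intervals, unlabeled samples receive a placeholder label, and the output is a simple CSV. The names `frame_labels`, `to_csv`, and the `"none"` placeholder are hypothetical and do not reflect MMAAT's actual export format.

```python
import csv
import io
from typing import List, Sequence, Tuple

# Hypothetical annotation: (start_seconds, end_seconds, label) covering [start, end).
Annotation = Tuple[float, float, str]


def frame_labels(timestamps: Sequence[float], annotations: List[Annotation],
                 default: str = "none") -> List[str]:
    """Assign each timestamp the label of the interval that contains it."""
    labels = []
    for t in timestamps:
        label = default
        for start, end, name in annotations:
            if start <= t < end:
                label = name
                break  # if intervals overlap, the first matching one wins
        labels.append(label)
    return labels


def to_csv(timestamps: Sequence[float], labels: Sequence[str]) -> str:
    """Serialize (timestamp, label) rows as CSV text, e.g. for a training script."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["timestamp", "label"])
    for t, label in zip(timestamps, labels):
        writer.writerow([t, label])
    return buffer.getvalue()
```

For example, timestamps `[0.0, 0.5, 1.0, 1.5, 2.0]` with annotations `[(0.4, 1.2, "wave"), (1.5, 3.0, "grab")]` yield the labels `["none", "wave", "wave", "grab", "grab"]`.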

Citation

If you use our software, please cite our corresponding publication.

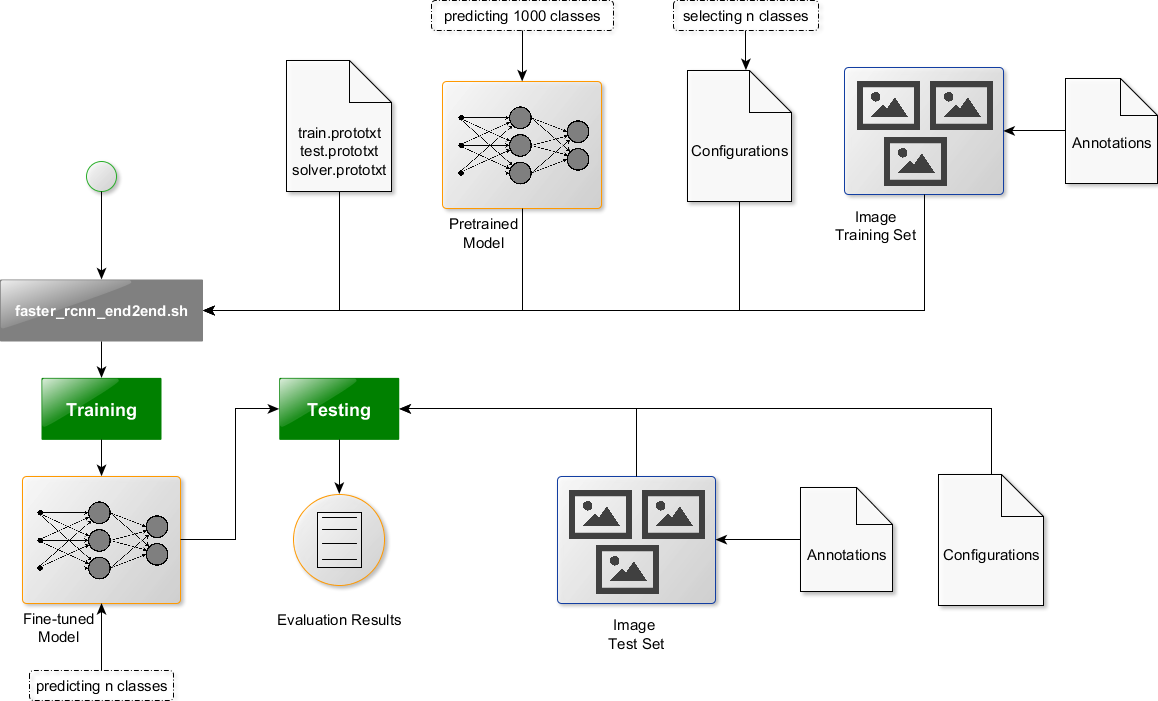

Fine-tuning deep CNN models on specific MS COCO categories

Fine-tuning a deep convolutional neural network (CNN) is often desirable. We aim to use fine-tuned image classification models for, e.g., interactive machine learning and multimodal interaction. To this end, we needed an easy-to-use tool for fine-tuning or re-training state-of-the-art deep neural networks on custom datasets or subsets of existing corpora.

py-faster-rcnn-ft

We forked the original version of py-faster-rcnn to add changes relevant to our research. The py-faster-rcnn-ft software library can be used to fine-tune the VGG CNN M 1024 model on custom subsets of the Microsoft Common Objects in Context (MS COCO) dataset. For example, we improved the procedure so that users no longer have to search the dataset by hand for suitable image files to use in the demo program: our implementation randomly selects images that contain at least one object of the categories on which the model is fine-tuned.
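The random image selection described above can be sketched on top of the standard MS COCO annotation layout, in which the top-level `categories`, `images`, and `annotations` lists are linked through `id`, `image_id`, and `category_id`. The function `sample_images` below is a hypothetical illustration under that assumption, not the code from py-faster-rcnn-ft: it collects every image with at least one object from the requested categories and draws a reproducible random sample.

```python
import random
from typing import Dict, Iterable, List


def sample_images(coco: Dict, category_names: Iterable[str], k: int, seed: int = 0) -> List[str]:
    """Randomly pick up to k file names of images that contain at least one
    object from ANY of the given categories (union, not intersection)."""
    wanted = set(category_names)
    cat_ids = {c["id"] for c in coco["categories"] if c["name"] in wanted}
    # Ids of images with at least one annotation in a wanted category.
    img_ids = {a["image_id"] for a in coco["annotations"] if a["category_id"] in cat_ids}
    # Sort so the sample depends only on the seed, not on set iteration order.
    file_names = sorted(img["file_name"] for img in coco["images"] if img["id"] in img_ids)
    rng = random.Random(seed)
    return rng.sample(file_names, min(k, len(file_names)))
```

In practice, `coco` would be the dictionary obtained with `json.load` from a COCO annotation file. The union is taken explicitly because pycocotools' `COCO.getImgIds(catIds=...)` intersects the image sets of the given categories, returning only images that contain all of them.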

py-faster-rcnn-ft is publicly available on GitHub.

Citation

If you use our software for a research project, we appreciate a reference to our corresponding paper (PDF).

BibTeX entry:

@article{Sonntag2017a,
  title = {{Fine-tuning deep CNN models on specific MS COCO categories}},
  author = {Sonntag, Daniel and Barz, Michael and Zacharias, Jan and Stauden, Sven and Rahmani, Vahid and Fóthi, Áron and Lőrincz, András},
  archivePrefix = {arXiv},
  arxivId = {1709.01476},
  eprint = {1709.01476},
  pages = {0--3},
  url = {http://arxiv.org/abs/1709.01476},
  year = {2017}
}